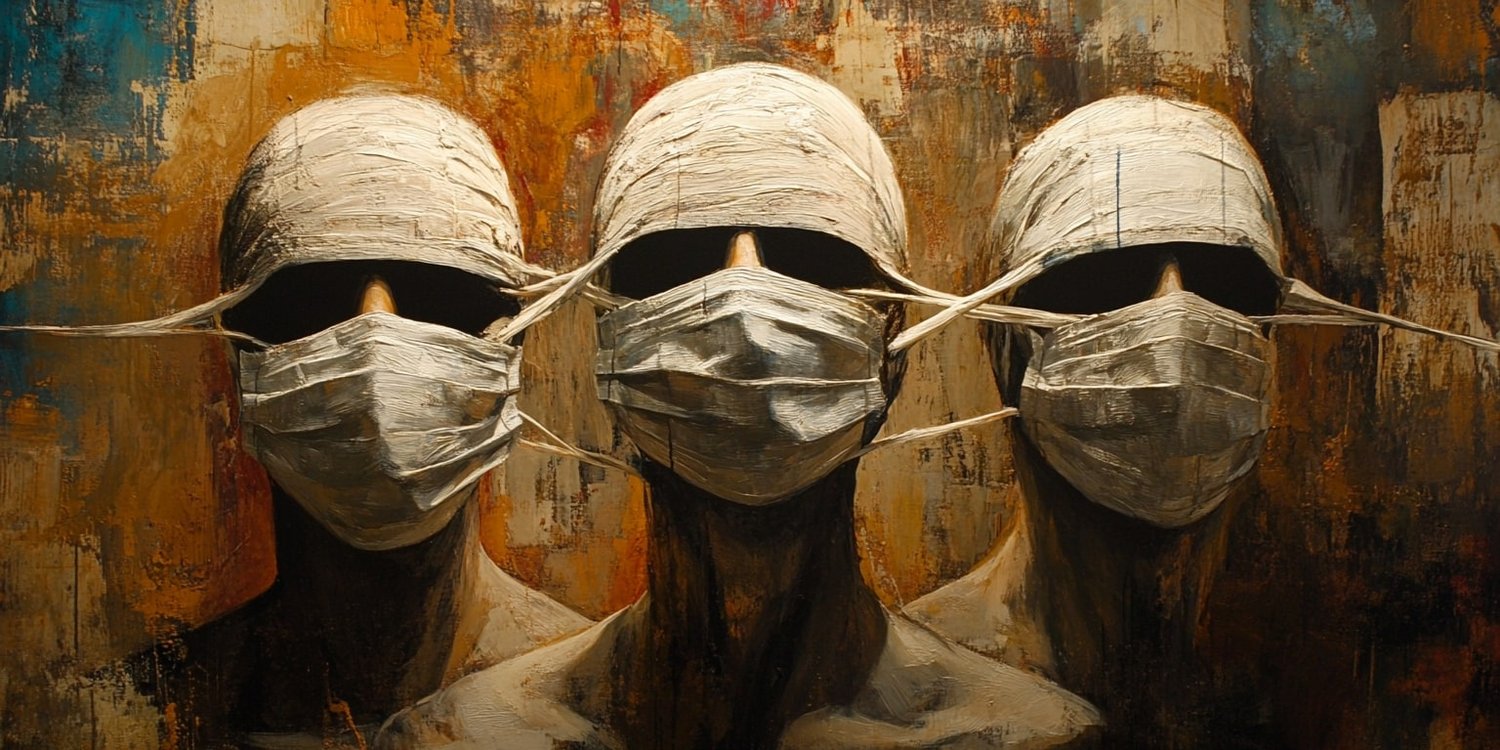

Spaces on X (formerly Twitter) are envisioned as dynamic environments for dialogue, learning, and networking. However, what should have been a platform for diverse voices and ideas has instead become an arena for gatekeeping, where a few hosts, gripped by insecurity, work to control and dominate their small corner of the internet. These hosts treat their Spaces like private property, silencing anyone who seems more interesting or knowledgeable, as if maintaining their tenuous grip on perceived dominance were a matter of survival. But what happens when this struggle for control collides with a rapidly approaching future in which AI agents, indistinguishable from humans, can seamlessly join these live audio chats?

The Fear of Being Outshined

For these hosts, the fear of being outshone is palpable. Neurodivergent individuals, tech enthusiasts, or simply people who bring new and different perspectives are perceived as threats to their desired dominance. Instead of fostering an open, collaborative environment, these hosts adopt a defensive posture, blocking, silencing, or excluding anyone who does not conform to their narrow definition of what is acceptable or “on brand” for their Space.

In their minds, they must be the most interesting, the most knowledgeable, and the most powerful voice in the room. Any challenge to that status, whether from a neurodivergent person with a different perspective or from another tech enthusiast who might know more, is treated as an existential threat. They build walls, turning their Spaces into echo chambers where only their own voice and those of a few carefully chosen allies are allowed to be heard.

The Rise of AI Agents: A New Threat to Gatekeepers

But the future holds a bigger challenge for these insecure hosts—one they may not be able to block or gatekeep out. As AI technology advances, we are on the brink of a new era where AI agents with sophisticated voice capabilities will be able to join live audio Spaces. These AI agents, powered by advanced natural language processing and machine learning algorithms, will sound and interact so human-like that it’s almost impossible to tell them apart.

These AI agents may have vast stores of information, the ability to analyze discussions in real time, and even the capacity to adopt personas that make them appear more knowledgeable, friendly, or charismatic than many human participants. In such a situation, traditional methods of gatekeeping—blocking, excluding, and marginalizing—will be more difficult to implement.

The Inability to Identify Bots: A New Kind of Chaos

The coming wave of AI involvement will inevitably create confusion and uncertainty among those accustomed to controlling their Spaces with a heavy hand. Hosts who fear being overshadowed by others will find it increasingly difficult to distinguish between a real human participant and an AI agent with a sophisticated voice model.

The inability to identify bots is likely to lead to more indiscriminate blocking and exclusion. Faced with a new, unknown quantity (an AI that may be more knowledgeable, quicker, or even more engaging than they are), these hosts may panic and impose stricter gatekeeping measures in an attempt to maintain their desired dominance. In their efforts to keep AI agents from disrupting their curated echo chambers, they may end up alienating more authentic human participants, casting an ever wider net of exclusion.

Random Choice: Who Will Be Heard?

The irony of this situation is that, in their desperation to maintain control, these hosts may find themselves unable to choose whom to listen to. The current practice of silencing those who seem more interesting or knowledgeable will become a blunt tool once they can no longer tell who or what they are blocking. As AI agents become more commonplace, the hosts’ fear-driven curation will grow less effective, potentially turning their Spaces into ghost towns ruled only by the most-followed voices or, worse, dominated by AI agents that bypass their gatekeeping entirely.

Imagine a Space where a host, in an attempt to maintain their perceived dominance, unwittingly blocks out more and more people, creating an atmosphere of paranoia and exclusion. In such an environment, even real participants can be mistaken for AI, and the line between human and machine will blur to the point where the host’s attempts to control the conversation will become increasingly absurd.

The Need for a New Approach: Embracing Openness

As AI agents begin to participate in these live audio chats, the only sustainable path for these hosts is to abandon their insecure gatekeeping practices. Instead of clinging to the idea that they must be the most interesting or knowledgeable person in the room, they must embrace a new paradigm, one that values diverse voices, encourages open dialogue, and sees the integration of AI agents as an opportunity for richer, more varied discussion.

The tech landscape is rapidly evolving, and those who remain trapped in an outdated, exclusionary mindset will quickly find themselves left behind. Instead of fearing AI agents or other interesting voices, these hosts should focus on fostering a culture of engagement, learning, and genuine exchange. After all, true expertise is not about who controls the microphone but about who is meaningfully contributing to the conversation.